Part Three | Present but Not Protected

By Agnes Agyepong

Founder, Global Child and Maternal Health CIC

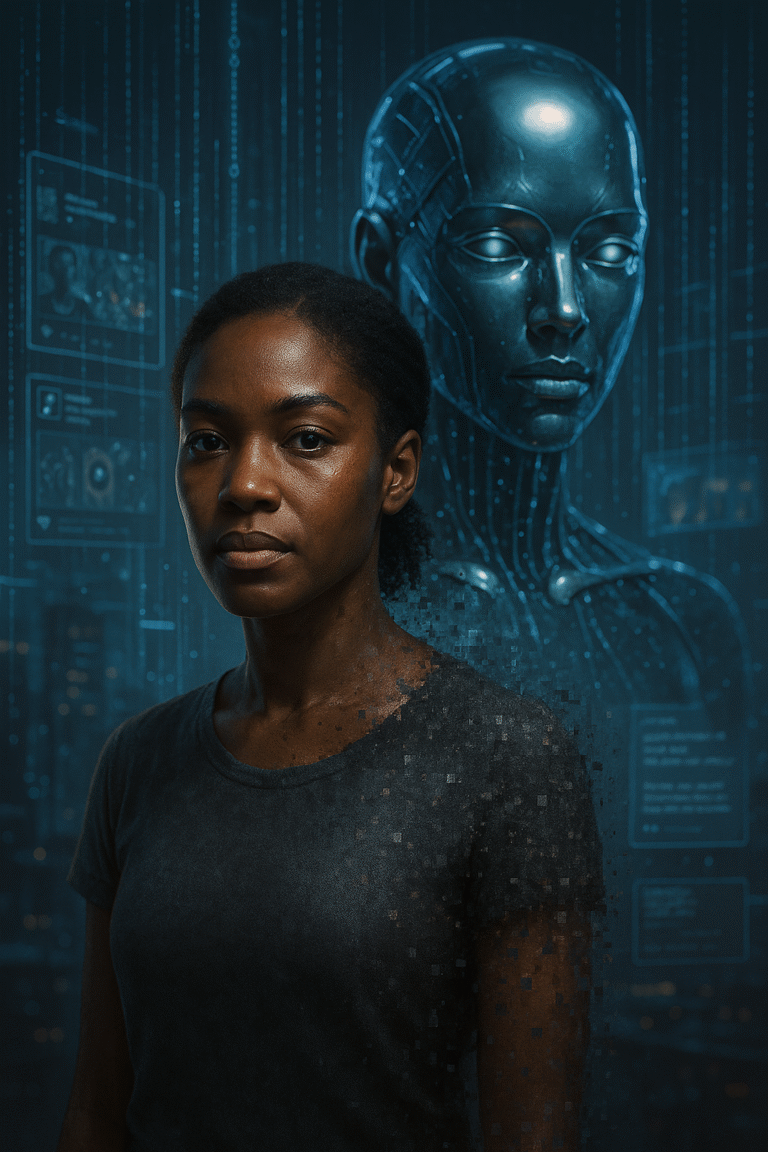

We are often told we are not present in AI.

That artificial intelligence systems replicate harm because people like us were left out of the room. That data on our lives is scarce. That algorithms are biased because they weren’t built for us, or by us.

But that is not the full story.

Because AI now writes in our tone.

It answers with our rhythm.

It speaks in ways shaped by our resistance, our humour, our inherited knowing.

So we have to ask:

If we are missing, then why do the machines sound like us? If we are present, then why are we not protected?

This is the contradiction no one wants to name: We are excluded from power, but included in the product.

We are underrepresented in design, but overexposed in data. We are called invisible, even as we’re being mimicked.

In the language of tech, this tension gets flattened. It becomes a question of representation.

It becomes a line in a funding pitch: “We need more diversity in AI.” But this is not about diversity.

This is about ownership, consent, and control.

Let’s be specific.

- Black communities, Indigenous peoples, and the Global Majority have been present in online spaces for decades, through digitised oral histories, uploaded videos, shared captions, posted poetry, and archived movements.

- Many AI systems are trained on data gathered from the internet. They learn from whatever is publicly available, even when that content was never meant to be repurposed, never consented to, never protected.

- That means our language, our cultural patterns, our ways of speaking and teaching are now embedded in the outputs of the most powerful technologies of our time.

So we are not missing.

We are misused.

What makes this more insidious is that our presence is now being used to give AI systems a human, familiar, even trustworthy tone. A voice that sounds empathetic.

A tone that feels warm and “relatable.”

Often, it’s the tone of the very communities most harmed by systemic neglect.

There is a difference between being included and being absorbed. Between being represented and being replicated. Between being visible and being authorised.

And that difference is everything.

The digital world is filled with our presence, but presence without protection is exploitation.

In the old colonial order, our lands were mapped and mined before we were recognised as human.

In today’s digital empire, our language is mapped and mined before it is recognised as intellectual property.

AI has become the latest site of that extraction. A place where we are studied without being seen.

Where we are referenced without being remembered.

Where we are present but never credited.

This cannot continue unchallenged.

Tech companies are now deploying AI across education, healthcare, justice, and government systems. These tools will shape decision-making at scale. They will define credibility. They will automate care. They will determine truth.

If we are not protected, our voices will be used against us: to simulate empathy, to smooth over inequality, to give systems a human face without human responsibility.

So what does protection look like?

It starts with naming this moment for what it is: A digital land grab.

A continuation of knowledge theft. A replication of colonial logic dressed up in code.

And then it moves towards action:

- Consent frameworks for how cultural and ancestral knowledge is used

- Community-informed AI design

- Legal and ethical protocols that recognise data is not neutral, especially when it sounds like someone’s grandmother

- And a global recognition that presence does not mean power

Because we have always been here. What we need now is not to be discovered, but to be protected.

Author’s Statement on Rights and Intellectual Property

Agnes Agyepong | Founder, Global Child and Maternal Health CIC

This blog and all entries in the Uncited: The Quiet Theft of African Knowing series are the original work and intellectual property of Agnes Agyepong.

They may not be copied, adapted, translated, scraped, or used to train any AI system, in part or in full, without explicit, written permission.

These reflections are grounded in lived experience, matrilineal inheritance, and cultural knowledge systems that predate modern archives. They are shared as part of a public dialogue, not as open data.

For citation, collaboration, or licensing enquiries, please contact: agnes@globalcmh.org.

All rights reserved.

About the Author

Agnes Agyepong

Founder & CEO

Agnes Agyepong is a mother of three and the founder and CEO of The Global Black Maternal Health Institute. As a maternal health advocate in the UK and former Head of Engagement at a national charity, Agnes has published articles and spoken extensively about the need for maternal health research to become more diverse and inclusive.