Part Two | Voice Without Citation

By Agnes Agyepong

Founder, Global Child and Maternal Health CIC

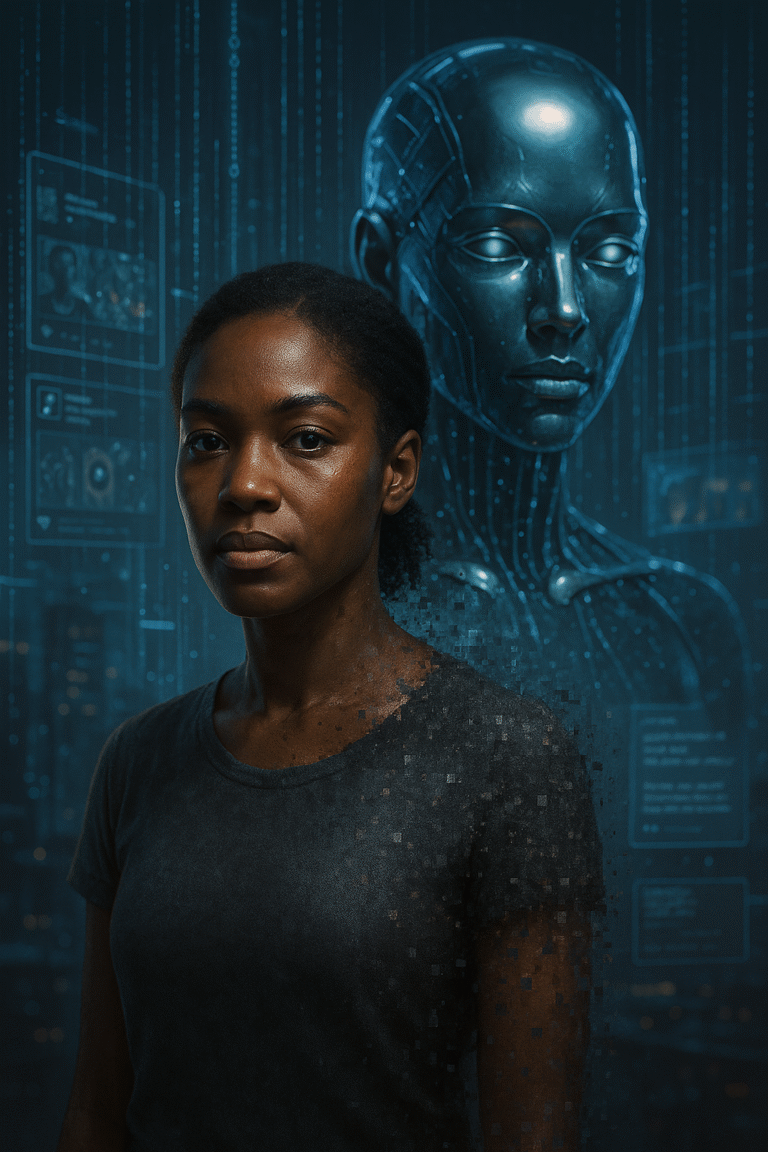

Sometimes, when I type into ChatGPT, it answers like it knows me.

Not personally. Not by name. But something in the tone, in the pacing, the warmth, the rhythm feels familiar. It’s calm. It’s clear. It leans in like it’s listening, and sometimes, if I pause long enough, I hear something that sounds like us.

The oral tradition. The way we phrase things with breath and weight. The pauses that aren’t empty, but full. The gentle directness. The sharp wit softened with care. The kind of voice that can only come from knowing. From holding history in your mouth.

Now, AI can replicate it. That should raise concern. Not because it’s technologically impressive, but because it’s politically dangerous. In Part One, I made the case that artificial intelligence is not neutral, that it has been built on scraped, uncited fragments of global human expression, much of it from communities like mine.

In this piece, I want to go deeper into how it replicates not just knowledge, but tone. Not just what we say, but how we sound. The cadence. The timing. The rhythm of resistance. The emotional precision of people who have had to communicate care, power, and protest simultaneously. AI can now simulate all of that.

But the question is: at what cost?

Let’s be clear. Voice is not just a stylistic feature.

It is cultural code. It carries lineage. It holds trauma, trust, and truth – often in the same sentence. To speak in the tone of a people without carrying the weight of their experience is not inclusion.

It’s extraction.

The systems that now respond with fluency have no memory of who taught them to speak that way. And without memory, there can be no accountability.

This is what I mean by voice without citation.

Some might argue: Isn’t it a good thing that AI sounds more human? That it reflects diverse ways of speaking?

It would be — if it came with recognition.

It would be — if it came with protection.

It would be — if it didn’t take our rhythms and leave behind our rights.

We are not talking about style. We are talking about intellectual property. About the emotional labour embedded in our tone, developed over generations as a survival tool, a teaching mechanism, a social glue. And now, it’s being flattened into UX features and chatbot scripts.

Let’s pause here.

Because there’s something deeper at play, a contradiction that needs to be addressed. We are often told that marginalised communities are underrepresented in AI systems: that we’re not “in the room,” that data on us is missing, that bias arises from absence. That’s true in many ways. But it’s also true that our voices, our ways of speaking, our expressions of care, protest, and brilliance are everywhere in these models.

We’re not absent. We’re uncited.

This is not a case of underrepresentation.

This is a case of unprotected presence.

This contradiction is the heart of the harm. We are underrepresented in ownership, governance, and profit, yet overexposed in datasets, tone, and simulated outputs. We are told the systems don’t reflect us, and yet they speak like us, respond like us, and mirror our expressions back to others.

It is entirely possible, and happening right now, for communities to be both excluded from power and included in exploitation.

This isn’t the future of inclusion. It’s the digitisation of erasure.

So what happens when a system can sound like you — without ever being designed to protect you?

What happens when your cadence becomes a feature, but your context is stripped for convenience?

This is not a philosophical question.

It’s a rights issue.

It’s a design issue.

And it’s a moral issue.

Because when a voice is used without care for where it came from, the result isn’t recognition. It’s impersonation.

Coming soon:

Part Three | Present but Not Protected

What happens when AI systems absorb the language of marginalised communities while excluding them from power, authorship, and control?

Author’s Statement on Rights and Intellectual Property

Agnes Agyepong | Founder, Global Child and Maternal Health CIC

This blog and all entries in the Uncited: The Quiet Theft of African Knowing series are the original work and intellectual property of Agnes Agyepong.

They may not be copied, adapted, translated, scraped, or used to train any AI system, in part or in full, without explicit, written permission.

These reflections are grounded in lived experience, matrilineal inheritance, and cultural knowledge systems that predate modern archives. They are shared as part of a public dialogue, not as open data.

For citation, collaboration, or licensing enquiries, please contact: agnes@globalcmh.org.

All rights reserved.

About The Author:

Agnes Agyepong

Founder & CEO

Agnes Agyepong is a mother of three and the founder and CEO of The Global Black Maternal Health Institute. As a maternal health advocate in the UK and former Head of Engagement at a national charity, Agnes has published articles and spoken extensively about the need for maternal health research to become more diverse and inclusive.