Part One | The Machine Is Not Neutral

By Agnes Agyepong

Founder, Global Child and Maternal Health CIC

I was born and raised in London, educated in its systems, shaped by its language, and politicised by its silences. But my way of knowing was never just British. It was African before I had the words for it.

That knowing wasn’t written. It was remembered. Passed through my matrilineal line. Held in gestures, warnings, prayers, and patterns. It didn’t need to be cited because it was lived.

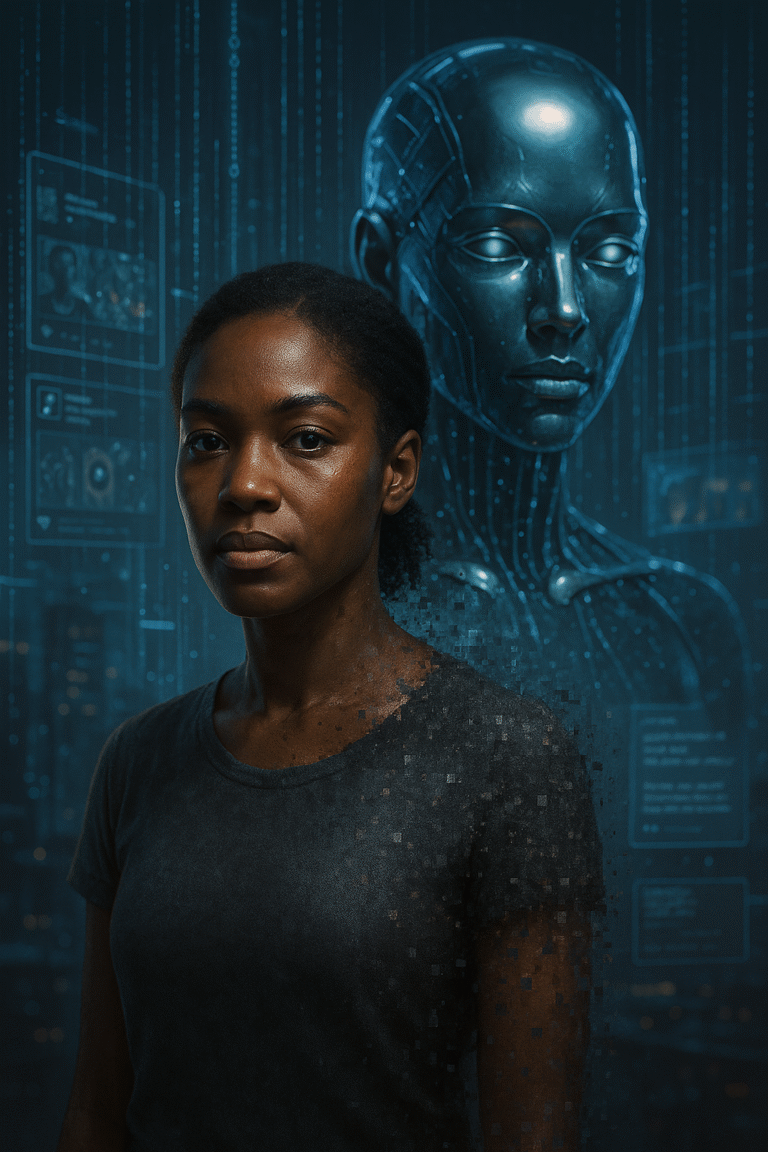

Now, I find myself face to face with artificial intelligence. Platforms like ChatGPT, trained on billions of fragments of human expression, designed to “know” everything and speak with confidence about anything. And I recognise something unsettling: its fluency feels familiar.

It responds in ways I’ve heard before, not just because of how much it’s been trained on, but because of who it’s been trained on.

We are told that AI is neutral.

That it reflects the world. But the world it reflects is one shaped by extraction.

For generations, anthropologists entered African communities and recorded our knowledge (our philosophies, rituals, healing systems, and governance structures), not to preserve it for us but to study it for others. They renamed our wisdom “culture.” Turned our cosmologies into field notes. Placed our knowledge systems under the microscope, then published their findings with their own names on the cover.

Today, AI continues that legacy.

Not with notebooks and voice recorders, but with scraping tools and training sets. It pulls from texts, videos, prayers, and posts: language that lives in rhythm, urgency, and resistance.

Language born from lives like mine. And yet, when it responds, it offers no memory of where that language came from. It is fluent. But it is not faithful. It sounds like us, but does not know us. It reflects our tone, but erases our context.

This is not a fear of technology. This is a call for memory.

Because I know what happens when knowledge is used without acknowledgment.

It is used against us, or without us. Or in rooms we are never invited into, until someone needs to “diversify” the panel.

But this is different. Because AI doesn’t just borrow language. It reshapes the archive of the world, training itself on material that was never meant to be data, material that was sacred, inherited, embodied.

We are not entering a new phase in which knowledge becomes the next frontier of colonialism.

Knowledge was the first frontier.

From the moment of contact, colonial power was built on the denial, distortion, and theft of Indigenous and African ways of knowing. Anthropologists, missionaries, and empire-builders didn’t just take land; they took language, cosmology, story, and science.

They replaced living epistemologies with categories. They turned libraries like those in Timbuktu into historical footnotes, while elevating Aristotle and the European canon as the beginning of thought.

And now, in the age of AI, that same theft is being automated. What once required a voyage and a notebook can now happen in seconds, scraping sacred and ancestral knowledge from the internet without credit or accountability.

This isn’t just about memory. It’s about rights.

The UN Declaration on the Rights of Indigenous Peoples (Article 31) clearly states that Indigenous peoples have the right to maintain, control, protect, and develop their cultural heritage, traditional knowledge, and traditional cultural expressions, as well as their intellectual property over them. That right matters all the more in the face of commercial and technological misuse.

The UNESCO Recommendation on the Ethics of Artificial Intelligence (2021) calls for AI to be built in ways that respect cultural diversity, human dignity, and non-discrimination: principles that should keep historical injustices from being digitised into future systems.

But if AI can be trained on our ways of knowing without consent, transparency, or even acknowledgment, then those documents mean little.

We must demand more than ethics. We must demand accountability.

AI is here to stay.

By some estimates, automation could displace more than 20% of the global workforce by 2030. We are in the midst of the Fourth Industrial Revolution, and the systems being built today will shape how billions live, work, and learn.

This is not a call to stop. It’s a call to pause. To interrogate. To ensure that the future of intelligence does not repeat the sins of the past.

We must build systems with memory. With ceremony. With due process.

And we must protect the wisdom that has survived so much. Even when it is not cited.

I’m not writing as a technologist.

I’m writing as someone who knows what it feels like to be referenced without being recognised.

To have your tone used, but your truth erased.

This isn’t about nostalgia. It’s about sovereignty.

It’s about what we do with the knowledge we carry, when we realise the world has already taken pieces of it.

And the next time someone asks, “Where did the machine learn that?” I want us to remember: before there were training sets, we were teaching.

And what we know cannot be scraped.

Author’s Statement on Rights and Intellectual Property

Agnes Agyepong | Founder, Global Child and Maternal Health CIC

This blog and all entries in the “Uncited: The Quiet Theft of African Knowing” series are the original work and intellectual property of Agnes Agyepong.

They may not be copied, adapted, translated, scraped, or used to train any AI system, in part or in full, without explicit, written permission.

These reflections are grounded in lived experience, matrilineal inheritance, and cultural knowledge systems that predate modern archives. They are shared as part of a public dialogue – not as open data.

For citation, collaboration, or licensing enquiries, please contact: agnes@globalcmh.org.

All rights reserved.

About the Author

Agnes Agyepong

Founder & CEO

Agnes Agyepong is a mother of three and the founder and CEO of The Global Black Maternal Health Institute. As a maternal health advocate in the UK and former Head of Engagement at a national charity, Agnes has published articles and spoken extensively about the need for maternal health research to become more diverse and inclusive.